Why Have AWS, Microsoft and Alibaba Started to Embrace OAM Amid the Serverless Trend?

Recently, the AWS ECS team released an open source project called Amazon ECS for Open Application Model on its official GitHub, and more and more vendors are beginning to explore implementations of OAM. What makes OAM attractive enough for multiple cloud vendors to rally around it?

Serverless and AWS

The term Serverless was first used in a 2012 article titled “Why The Future of Software and Apps is Serverless”. However, if you dig into the history, you will find that the article is really about the software engineering practices of continuous integration and code version control. These have nothing to do with what Serverless means today: “scale to zero”, “pay as you go”, and FaaS/BaaS.

In 2014, AWS released a product called Lambda. Its design concept is simple: cloud computing ultimately exists to serve applications. A user who wants to deploy an application should therefore only need a place to write the program that performs a specific task, without caring which physical or virtual machine that program runs on.
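To make the idea concrete, here is a minimal function in the shape the AWS Lambda Python runtime expects. It is a handler that receives an `event` and a `context` object. The `name` input field and the response shape, which assumes an API Gateway proxy integration, are illustrative choices. Notice that nothing in the code refers to a server.

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda calls once per invocation.

    `event` carries the request payload and `context` carries runtime
    metadata; nothing here says which machine the code runs on.
    """
    # "name" is a hypothetical input field chosen for this example.
    name = event.get("name", "world")
    # Response shape assumes an API Gateway proxy integration.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local call for illustration; in production Lambda invokes the handler.
    print(lambda_handler({"name": "OAM"}, None))
```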

It was the release of Lambda that raised the “Serverless” paradigm to a whole new level. Serverless offers a brand new architecture for deploying and running applications in the cloud. Users do not need to deal with complex server configuration. They only need to care about their own code and how to package it into a “runnable entity” that the cloud platform can host. AWS then released a series of now-classic features, such as scaling application instances based on actual traffic and charging for actual usage instead of pre-allocated resources. With these, it gradually established the de facto standard in the serverless field.
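Two of those features, scaling with actual traffic (down to zero) and billing by actual usage, can be illustrated with a little arithmetic. The sketch below is not AWS's scaling algorithm. It assumes a hypothetical fixed per-instance concurrency of 10 and a made-up traffic trace. It then compares the instance-seconds billed on demand against reserving peak capacity for the whole period.

```python
import math


def desired_instances(concurrent_requests: int, per_instance_concurrency: int) -> int:
    """Instances needed for the current load; zero when there is no traffic."""
    if concurrent_requests <= 0:
        return 0  # scale to zero: nothing is running, so nothing is billed
    return math.ceil(concurrent_requests / per_instance_concurrency)


# Hypothetical load: concurrent requests observed in six one-second intervals.
traffic = [0, 0, 15, 40, 3, 0]
plan = [desired_instances(r, per_instance_concurrency=10) for r in traffic]
print(plan)  # [0, 0, 2, 4, 1, 0]

# Pay-as-you-go bills the instance-seconds actually used...
billed_on_demand = sum(plan)                  # 7 instance-seconds
# ...whereas pre-allocation reserves peak capacity for the whole period.
billed_pre_allocated = max(plan) * len(plan)  # 24 instance-seconds
print(billed_on_demand, billed_pre_allocated)  # 7 24
```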

In 2017, AWS released the Fargate service, extending the Serverless concept to container-based runnable entities. Others, such as Google Cloud Run, soon followed, setting off a wave of “next generation” container-based Serverless runtimes.

Serverless and Open Application Model (OAM)

So what does Open Application Model (OAM) have to do with AWS and Serverless?

First, OAM (Open Application Model) is an application description specification (spec) jointly initiated by Alibaba Cloud and Microsoft and maintained by the cloud native community. Its core concept is “application-centric”. Developers (R&D) and operators (O&M) collaborate around a set of declarative, flexible, and extensible upper-level abstractions, instead of working directly with complex and obscure infrastructure-layer APIs.

For example, under the OAM specification, a container-based application that uses the K8s HPA (Horizontal Pod Autoscaler) for horizontal scaling is defined by the following two YAML files.

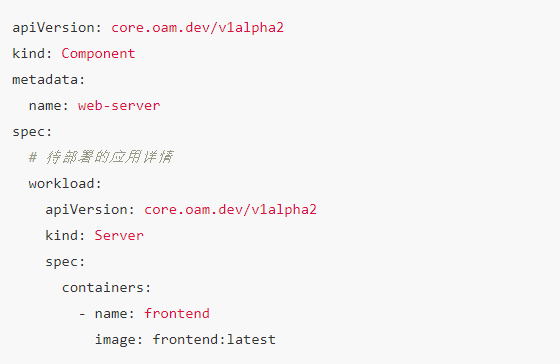

The YAML file written by R&D:

The YAML file written by DevOps:

Under the OAM specification, the concerns of R&D and O&M are completely separated. Each side writes only the handful of fields relevant to it, rather than complete K8s Deployment and HPA objects, and can still easily define and release the application. This is the benefit an “upper-level abstraction” brings.

Once YAML files like these are submitted to K8s, the OAM plug-in automatically converts them into complete Deployment and HPA objects and runs them.
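As a rough illustration of that conversion, the Python sketch below uses plain dicts for the two halves. One is a component written by R&D; the other is an application configuration with a trait, written by O&M. The input field layout and the `autoscaler` trait format are deliberately simplified and hypothetical, not the exact OAM schema, and the image name is made up. The output objects use the standard `apps/v1` Deployment and `autoscaling/v1` HorizontalPodAutoscaler fields. This is a sketch of the idea, not the OAM plug-in's code.

```python
# R&D's concern: what to run (simplified, hypothetical field layout).
component = {
    "name": "helloworld",
    "image": "example/helloworld:v1",  # hypothetical image
    "port": 8080,
}

# O&M's concern: how to operate it (simplified, hypothetical trait format).
app_config = {
    "component": "helloworld",
    "traits": [
        {"kind": "autoscaler", "minReplicas": 1, "maxReplicas": 5, "cpuPercent": 70},
    ],
}


def render(component: dict, app_config: dict) -> list[dict]:
    """Expand the two short documents into full Kubernetes objects."""
    name = component["name"]
    if app_config["component"] != name:
        raise ValueError(f"app config targets unknown component {app_config['component']!r}")
    labels = {"app": name}
    objects = [{
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": component["image"],
                    "ports": [{"containerPort": component["port"]}],
                }]},
            },
        },
    }]
    for trait in app_config["traits"]:
        if trait["kind"] != "autoscaler":
            raise ValueError(f"unsupported trait kind {trait['kind']!r}")
        # The autoscaler trait becomes an HPA that targets the Deployment above.
        objects.append({
            "apiVersion": "autoscaling/v1",
            "kind": "HorizontalPodAutoscaler",
            "metadata": {"name": name},
            "spec": {
                "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": name},
                "minReplicas": trait["minReplicas"],
                "maxReplicas": trait["maxReplicas"],
                "targetCPUUtilizationPercentage": trait["cpuPercent"],
            },
        })
    return objects


print([obj["kind"] for obj in render(component, app_config)])
# ['Deployment', 'HorizontalPodAutoscaler']
```

Each side edits only its own few fields, while the renderer fills in the boilerplate (selectors, labels, the scale target reference) that neither side has to write.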

OAM standardizes a series of cloud native application delivery concepts, such as “application”, “operational capabilities”, and “release boundary”. Developers of application management platforms can therefore use the specification to describe each application and its O&M strategies in concise YAML files. The OAM plug-in then maps those files to actual K8s resources (including CRDs).

In other words, OAM provides a de facto standard for defining “upper-level abstractions”. The most important role of such an abstraction is to keep infrastructure details (such as HPA, Ingress, containers, Pods, and Services) from leaking to developers and adding unnecessary complexity. As a result, as soon as OAM was released, it became known as the “magic weapon” for building K8s application platforms.

More importantly, this idea of “stripping away infrastructure-layer details and giving developers the friendliest possible upper-level abstraction” in the application description coincides with the Serverless goal of taking infrastructure out of the developer's view. Put more precisely, OAM is inherently serverless.

Because of this, OAM attracted a lot of attention in the serverless field as soon as it was released, and AWS was naturally among those paying attention.

The ultimate experience - AWS ECS for OAM

At the end of March 2020, the AWS ECS team released an open source project called Amazon ECS for Open Application Model on its official GitHub.

This project is the AWS team's attempt to support OAM on top of Serverless services. Its underlying runtime is the Serverless container service mentioned earlier, Fargate. The experience the project gives developers is very interesting, so let's take a look.

Preparation takes three steps, all of which can be done in one go:

Make your AWS account credentials available locally. You can set them up in one step with the aws configure command of the official AWS CLI.

Compile the project to obtain an executable named oam-ecs.

Run the oam-ecs env command to prepare the environment for subsequent deployments. When the command finishes, AWS will have automatically created a VPC and the corresponding public and private subnets for you.
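The first two steps are easy to check before running anything. The helper below, `preflight`, is a hypothetical convenience and not part of oam-ecs. It assumes credentials live either in the default file that aws configure writes (`~/.aws/credentials`) or in the standard `AWS_ACCESS_KEY_ID`/`AWS_SECRET_ACCESS_KEY` environment variables. It also checks that the compiled oam-ecs binary is on `PATH`. It does not detect other credential sources, such as a custom `AWS_SHARED_CREDENTIALS_FILE`, SSO, or instance roles, and it does not verify the VPC created in step three.

```python
import os
import shutil
from pathlib import Path


def preflight(home: Path, env: dict) -> list[str]:
    """Return the problems found; an empty list means steps 1 and 2 look done."""
    problems = []
    # `aws configure` saves credentials to ~/.aws/credentials by default;
    # the standard environment variables are another common source.
    has_file = (home / ".aws" / "credentials").is_file()
    has_env = bool(env.get("AWS_ACCESS_KEY_ID")) and bool(env.get("AWS_SECRET_ACCESS_KEY"))
    if not (has_file or has_env):
        problems.append("no AWS credentials found: run `aws configure`")
    # The compiled oam-ecs executable must be reachable on PATH.
    if shutil.which("oam-ecs") is None:
        problems.append("oam-ecs not found on PATH: build the project first")
    return problems


if __name__ == "__main__":
    for line in preflight(Path.home(), dict(os.environ)) or ["ready to run oam-ecs env"]:
        print(line)
```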

Once preparation is complete, you only need to define an OAM application YAML file locally, such as the helloworld example above. A single command then deploys a complete application with HPA on Fargate, directly accessible from the public Internet:

oam-ecs app deploy -f helloworld-app.yaml

Pretty easy, right? The official documentation of the AWS ECS for OAM project gives a more complex example, so let's walk through it.

This time, the application consists of three YAML files, again split between R&D and O&M concerns:

R&D's responsibility

server-component.yaml: the first component of the application, describing the application container we want to deploy;

worker-component.yaml: the second component of the application, describing a recurring job that checks whether the network in the current environment is reachable.

O&M's responsibility

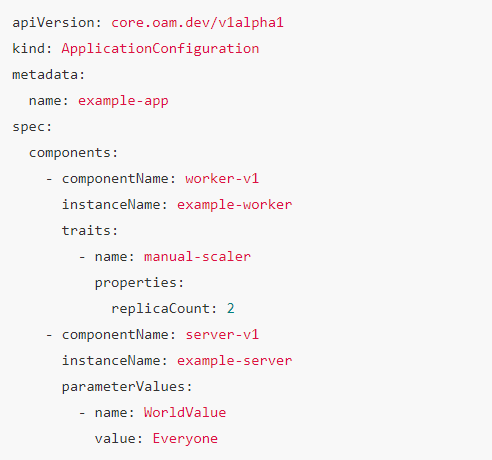

example-app.yaml: the complete component topology of the application, plus the operational characteristics (traits) of each component. In particular, it defines a “manual-scaler” O&M strategy used to scale worker-component.
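One way to picture this division of labor is as data. The O&M file refers to the components R&D defined by name and attaches traits to them. In the sketch below, the names worker-v1, server-v1, manual-scaler, and replicaCount come from the example. Everything else is a simplified, hypothetical stand-in rather than the real file contents, including the dict layout, the images, and the replica count of 2. The `validate` and `traits_of` helpers are likewise illustrative.

```python
# Simplified, hypothetical stand-ins for the three files. Images and the
# replica count are made up; only the names come from the example.
components = {  # defined by R&D in server-component.yaml / worker-component.yaml
    "server-v1": {"image": "example/server:1.0"},
    "worker-v1": {"image": "example/worker:1.0"},
}

example_app = {  # written by O&M in example-app.yaml
    "components": [
        {"componentName": "worker-v1",
         "traits": [{"name": "manual-scaler", "properties": {"replicaCount": 2}}]},
        {"componentName": "server-v1", "traits": []},
    ],
}


def validate(app: dict, known: dict) -> list[str]:
    """Report topology entries that reference components R&D never defined."""
    return [
        f"unknown component: {entry['componentName']}"
        for entry in app["components"]
        if entry["componentName"] not in known
    ]


def traits_of(app: dict, component_name: str) -> list[str]:
    """List the names of the O&M traits attached to one component."""
    for entry in app["components"]:
        if entry["componentName"] == component_name:
            return [t["name"] for t in entry["traits"]]
    return []


print(validate(example_app, components))    # []
print(traits_of(example_app, "worker-v1"))  # ['manual-scaler']
```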

The complete application description, example-app.yaml, is shown below:

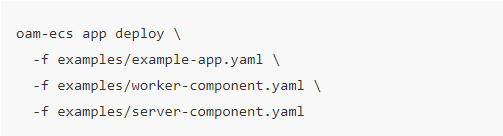

As you can see, it defines two components (worker-v1 and server-v1), and worker-v1 carries a manual scaling strategy called manual-scaler. Deploying this more complex application still takes just one command:

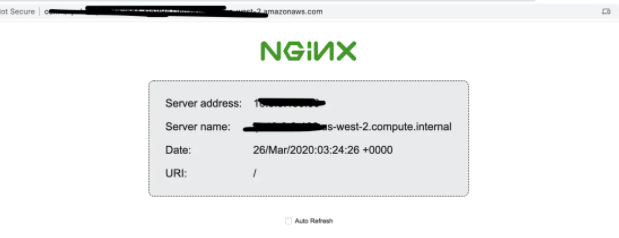

After the command completes (readers in mainland China may need some patience due to network conditions), you can view the application's access information and DNS name with the oam-ecs app show command. Enter this address in a browser to access the application directly, as shown below:

To change the application configuration, such as updating the image or changing the value of replicaCount, you simply edit the YAML file above and redeploy. Management is fully declarative.
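Declarative management means the tool, not the user, works out what needs to change. Below is a minimal sketch of that idea. It assumes each component's deployed settings can be read back as a flat dict; the image tags and replica counts are hypothetical. This is not a description of how oam-ecs applies updates internally. The hypothetical `plan_changes` function compares the desired state from the edited YAML with what is running and emits only the necessary actions.

```python
def plan_changes(desired: dict, current: dict) -> list[str]:
    """Diff desired vs. deployed per-component settings into a list of actions.

    Only fields present in `desired` are compared; a field dropped from the
    YAML is not reported in this simplified sketch.
    """
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in current:
            actions.append(f"create {name}")
            continue
        for field, value in sorted(spec.items()):
            old = current[name].get(field)
            if old != value:
                actions.append(f"update {name}.{field}: {old!r} -> {value!r}")
    for name in sorted(current.keys() - desired.keys()):
        actions.append(f"delete {name}")
    return actions


# What is running now vs. what the edited YAML asks for (hypothetical values).
current = {
    "server-v1": {"image": "example/server:1.0"},
    "worker-v1": {"image": "example/worker:1.0", "replicaCount": 1},
}
desired = {
    "server-v1": {"image": "example/server:1.1"},                     # image updated
    "worker-v1": {"image": "example/worker:1.0", "replicaCount": 3},  # scaled out
}
for action in plan_changes(desired, current):
    print(action)
# update server-v1.image: 'example/server:1.0' -> 'example/server:1.1'
# update worker-v1.replicaCount: 1 -> 3
```

Redeploying an unchanged file produces an empty plan, which is what makes "edit and redeploy" safe to repeat.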

By contrast, doing the same through the AWS Console means jumping between at least five product pages to configure everything. Alternatively, you have to learn CloudFormation syntax and write a very long CloudFormation template to bring up every resource the application needs, such as Fargate instances, the load balancer, networking, and DNS configuration.

With the OAM specification, however, defining and deploying the application becomes extremely simple. The original procedural, step-by-step cloud service operations are also turned into a concise, friendly declarative YAML file. The work needed to implement the OAM specification here amounts to only a few hundred lines of code.
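The sketch below shows why the declarative file is so much shorter than a hand-written template: it expands two short component entries into a CloudFormation-style template. The article does not say how oam-ecs builds its AWS resources. The `to_template` and `logical_id` helpers, the images, and the CPU and memory sizes are assumptions for illustration only. The resource types and property names are standard CloudFormation ones. However, the template is deliberately incomplete and would not deploy as-is: an awsvpc service also needs a network configuration, and the load balancer, DNS records, and IAM roles are omitted.

```python
import json


def logical_id(name: str) -> str:
    """CloudFormation logical IDs must be alphanumeric: worker-v1 -> WorkerV1."""
    return "".join(part.capitalize() for part in name.split("-"))


def to_template(components: list[dict]) -> dict:
    """Expand simplified component entries into a CloudFormation-style template.

    Deliberately incomplete: the awsvpc network configuration, load balancer,
    DNS records and IAM roles that a real deployment needs are all omitted.
    """
    resources = {"Cluster": {"Type": "AWS::ECS::Cluster"}}
    for comp in components:
        lid = logical_id(comp["name"])
        resources[f"{lid}TaskDef"] = {
            "Type": "AWS::ECS::TaskDefinition",
            "Properties": {
                "RequiresCompatibilities": ["FARGATE"],
                "NetworkMode": "awsvpc",  # Fargate tasks use awsvpc networking
                "Cpu": "256",
                "Memory": "512",
                "ContainerDefinitions": [{"Name": comp["name"], "Image": comp["image"]}],
            },
        }
        resources[f"{lid}Service"] = {
            "Type": "AWS::ECS::Service",
            "Properties": {
                "Cluster": {"Ref": "Cluster"},
                "LaunchType": "FARGATE",
                "DesiredCount": comp.get("replicaCount", 1),
                "TaskDefinition": {"Ref": f"{lid}TaskDef"},
            },
        }
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}


# Two short declarative entries (hypothetical images and replica count).
app_components = [
    {"name": "server-v1", "image": "example/server:1.0"},
    {"name": "worker-v1", "image": "example/worker:1.0", "replicaCount": 2},
]
template = to_template(app_components)
print(sorted(template["Resources"]))
# ['Cluster', 'ServerV1Service', 'ServerV1TaskDef', 'WorkerV1Service', 'WorkerV1TaskDef']
print(json.dumps(template, indent=2))
```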

More importantly, when a serverless service such as AWS Fargate is combined with a developer-friendly application definition such as OAM, you truly realize that this simple, refreshing experience with an extremely low mental burden is the ultimate Serverless experience for developers.

Finally - when the application model meets Serverless

The OAM model has drawn a huge response in the cloud native application delivery ecosystem. Alibaba Cloud's EDAS service has become the industry's first production-grade application management platform based on OAM, and it will soon launch a next-generation “application-centric” product experience. In the CNCF community, Crossplane, a well-known cross-cloud application management and delivery platform, has also become an important adopter and maintainer of the OAM specification.

In fact, not just AWS Fargate but any serverless service in the cloud computing ecosystem can easily adopt OAM as its developer-facing presentation layer and application definition. Doing so simplifies and abstracts away complex infrastructure APIs, and it turns complicated procedural operations into Kubernetes-style declarative application management in one step. More importantly, OAM is highly extensible. You can use it not only to deploy container applications on Fargate but also to describe functions, virtual machines, WebAssembly modules, and virtually any other type of workload you can think of. You can then deploy them easily on serverless services and even migrate them seamlessly between cloud services. These seemingly “magical” capabilities are the sparks of innovation that fly when a standardized, extensible “application model” meets a Serverless platform.
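The extensibility argument boils down to a dispatch table. One application description lists workloads of different kinds, and each platform supplies a backend per kind. Migrating means swapping the table, not rewriting the description. The sketch below assumes everything it names: the platforms `cloud-a` and `cloud-b`, the workload kinds, the field names, and the string "plans". It illustrates the design idea, not OAM's actual extension mechanism.

```python
from typing import Callable

# A backend turns one workload description into a platform-specific action
# (a plain string here). Platforms, kinds and fields are all hypothetical.
Backend = Callable[[dict], str]


def make_registry(platform: str) -> dict[str, Backend]:
    """Map each workload kind to a deploy function for one platform."""
    return {
        "container": lambda w: f"{platform}: run container {w['image']}",
        "function": lambda w: f"{platform}: deploy function {w['handler']}",
        "vm": lambda w: f"{platform}: boot VM from {w['machine_image']}",
        "wasm": lambda w: f"{platform}: load WebAssembly module {w['module']}",
    }


def deploy(app: list[dict], registry: dict[str, Backend]) -> list[str]:
    """Dispatch every workload in the app to the backend for its kind."""
    plans = []
    for workload in app:
        backend = registry.get(workload["kind"])
        if backend is None:
            raise ValueError(f"no backend for workload kind {workload['kind']!r}")
        plans.append(backend(workload))
    return plans


app = [
    {"kind": "container", "image": "example/web:1.0"},
    {"kind": "function", "handler": "app.handler"},
]
# The same description deploys to two different platforms unchanged.
print(deploy(app, make_registry("cloud-a")))
# ['cloud-a: run container example/web:1.0', 'cloud-a: deploy function app.handler']
print(deploy(app, make_registry("cloud-b")))
# ['cloud-b: run container example/web:1.0', 'cloud-b: deploy function app.handler']
```

Supporting a new workload type means registering one more backend; the application descriptions that do not use it are untouched.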

“Application model + Serverless” has gradually become one of the hottest topics in the cloud native ecosystem. You are welcome to join the CNCF Special Interest Group for Application Delivery (SIG App Delivery) and help move the cloud computing ecosystem further in an “application-centric” direction!


The OAM specification and model already solve many existing problems, but the journey has just begun.

Reference: https://www.alibabacloud.com/blog/why-have-alibaba-started-to-embrace-oam-when-serverless-is-so-popular_596593