In high-concurrency scenarios, should the cache be updated first or the database first?

In large-scale systems, a cache is usually introduced to reduce the load on the database.

Once a cache is introduced, the cache and the database can easily become inconsistent, so users see stale data. To reduce this inconsistency, the strategy for updating the cache and the database is particularly important.

- In high-concurrency scenarios, should the cache be updated first or the database first?

- Cache aside

- Read through

- Write through

- Write behind

- Summary

Cache aside

Cache aside, also known as bypass cache, is a commonly used caching strategy.

Cache aside read request: The application first checks whether the cache holds the data. On a cache hit, it returns the cached data directly. On a cache miss, it queries the database, writes the result back to the cache, and finally returns the data to the client.

Cache aside write request: First update the database, and then delete the data from the cache. Looking at this write flow, some readers may ask: why delete the cache entry instead of updating it directly?
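The read and write paths described above can be sketched as follows. This is a minimal illustration, not a production implementation: the two dictionaries `cache` and `database` and the functions `read` and `write` are hypothetical stand-ins for a real cache service and database client.

```python
from typing import Dict, Optional

# Hypothetical in-memory stand-ins for a real cache and a real database.
cache: Dict[str, int] = {}
database: Dict[str, int] = {"age": 18}


def read(key: str) -> Optional[int]:
    """Cache-aside read: try the cache, fall back to the database on a miss."""
    if key in cache:
        return cache[key]          # cache hit: return the cached value
    value = database.get(key)      # cache miss: query the database
    if value is not None:
        cache[key] = value         # write the result back to the cache
    return value


def write(key: str, value: int) -> None:
    """Cache-aside write: update the database first, then delete the cache entry."""
    database[key] = value
    cache.pop(key, None)           # the next read repopulates the cache
```

Calling `read("age")` twice queries the database only once. After `write("age", 20)`, the cache entry is gone, so the next read fetches the new value from the database.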

Cache aside traps

Trap 1: update the database first, then update the cache

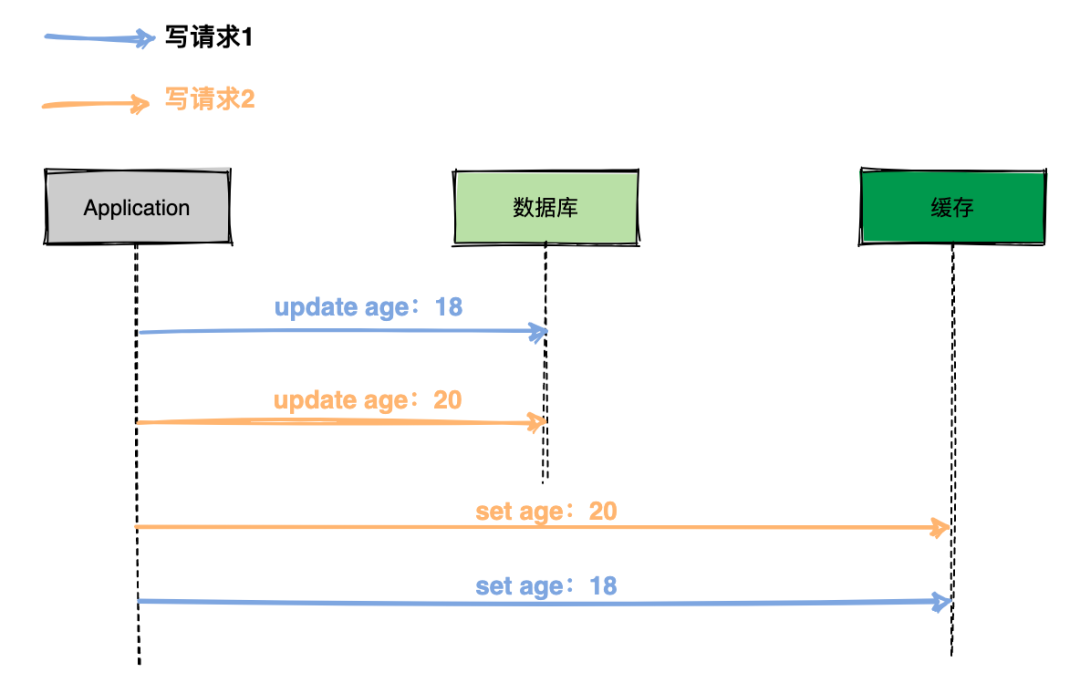

If two write requests need to update the same data at the same time, and each one updates the database first and then the cache, the data can become inconsistent under concurrency.

The execution process is shown above:

(1) Write request 1 updates the database, setting the age field to 18;

(2) Write request 2 updates the database, setting the age field to 20;

(3) Write request 2 updates the cache, setting the cached age to 20;

(4) Write request 1 updates the cache, setting the cached age to 18.

The expected result is an age of 20 in both the database and the cache, but the cached age actually ends up as 18. The cache no longer holds the latest value, so dirty data appears.
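The interleaving in steps (1) to (4) can be replayed deterministically. In this small sketch the two dictionaries are hypothetical stand-ins for the database and the cache, and the statements run in exactly the order listed above rather than on real threads.

```python
# Hypothetical stand-ins for the database and the cache.
database = {}
cache = {}

database["age"] = 18   # (1) write request 1 updates the database
database["age"] = 20   # (2) write request 2 updates the database
cache["age"] = 20      # (3) write request 2 updates the cache
cache["age"] = 18      # (4) write request 1 updates the cache, arriving late

print(database["age"], cache["age"])  # prints "20 18"
```

The last writer to the database (request 2) is not the last writer to the cache (request 1), which is why updating the cache on writes is fragile under concurrency.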

Trap 2: delete the cache first, then update the database

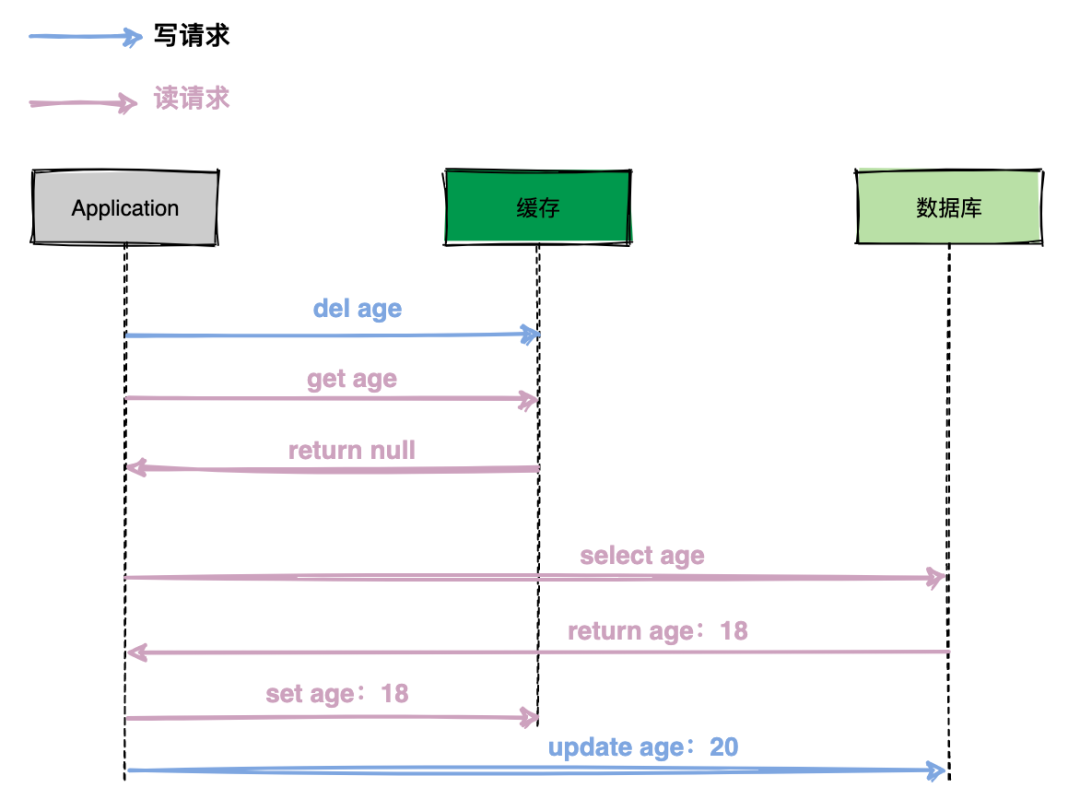

If a write request deletes the cache first and then updates the database, data inconsistencies can occur when a read request runs concurrently with the write request.

The execution process is shown above:

(1) The write request deletes the cached data;

(2) The read request misses the cache, queries the database, and writes the returned data (age 18) back to the cache;

(3) The write request updates the database (age 20).

At the end of the process, the age in the database is 20 and the age in the cache is 18. The cache and the database are inconsistent, and the cache holds dirty data.
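The same kind of replay shows how the read request slips in between the two halves of the write request. The dictionaries and the starting age of 18 are assumptions for illustration.

```python
# Hypothetical stand-ins; both start with the old value 18.
database = {"age": 18}
cache = {"age": 18}

cache.pop("age", None)        # (1) write request deletes the cache entry
stale = database["age"]       # (2) read request misses the cache, reads 18 ...
cache["age"] = stale          #     ... and writes it back to the cache
database["age"] = 20          # (3) write request updates the database

inconsistent = database["age"] != cache["age"]  # True: 20 in database, 18 in cache
```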

Trap 3: update the database first, and then delete the cache

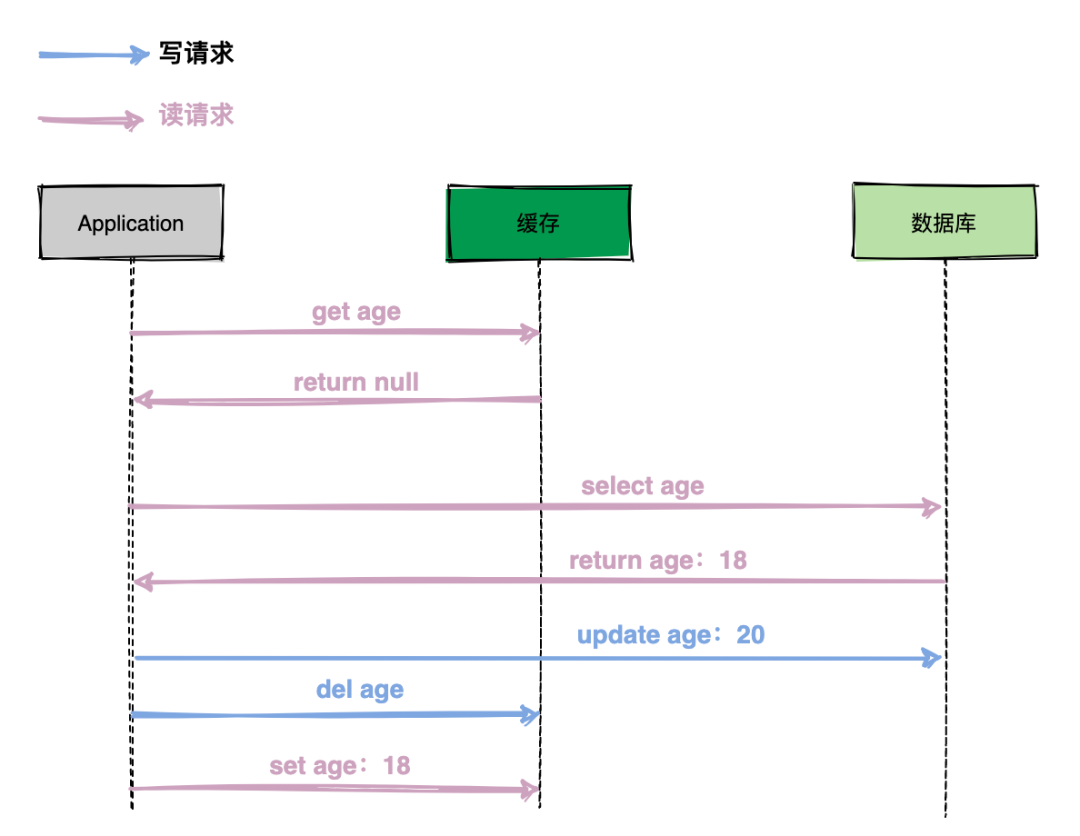

In real systems, updating the database first and then deleting the cache is the recommended approach for write requests, but in theory it can still go wrong, as the following example shows.

The execution process is shown above:

(1) The read request queries the cache first, misses, and queries the database, which returns the old data (age 18);

(2) The write request updates the database (age 20) and deletes the cache;

(3) The read request writes the old data back to the cache.

At the end of the process, the database age is 20 and the cache age is 18. The database and the cache are inconsistent, so the application reads old data from the cache.

On closer inspection, however, the probability of this problem is very low, because database updates usually take far longer than in-memory cache operations. The last step in the figure above, writing back to the cache (set age 18), is very fast and usually completes before the database update finishes, in which case the write request's cache delete removes the stale entry.

What if this extreme case does occur? The fallback is to set an expiration time on cached data. Most systems can tolerate a small amount of data being inconsistent for a short time.
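The sketch below replays the rare interleaving and shows how an expiration time bounds the damage. `TTLCache` is a hypothetical helper written for this example, and the clock is passed in explicitly as `now` so the behavior is deterministic; a real cache such as Redis tracks expiry itself.

```python
class TTLCache:
    """Minimal cache whose entries expire ttl seconds after being set (illustrative only)."""

    def __init__(self, ttl):
        self.ttl = ttl
        self._data = {}  # key -> (value, expires_at)

    def set(self, key, value, now):
        self._data[key] = (value, now + self.ttl)

    def get(self, key, now):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if now >= expires_at:
            del self._data[key]   # expired: treat as a miss
            return None
        return value

    def delete(self, key):
        self._data.pop(key, None)


database = {"age": 18}
cache = TTLCache(ttl=60.0)

stale = database["age"]            # (1) read request misses the cache and reads 18
database["age"] = 20               # (2) write request updates the database ...
cache.delete("age")                #     ... and deletes the (still empty) cache entry
cache.set("age", stale, now=0.0)   # (3) read request writes back the stale 18

during = cache.get("age", now=1.0)    # 18: stale while the entry is alive
after = cache.get("age", now=60.0)    # None: expired, the next read hits the database
```

The stale value lives for at most `ttl` seconds, which is the short window of inconsistency the text accepts.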

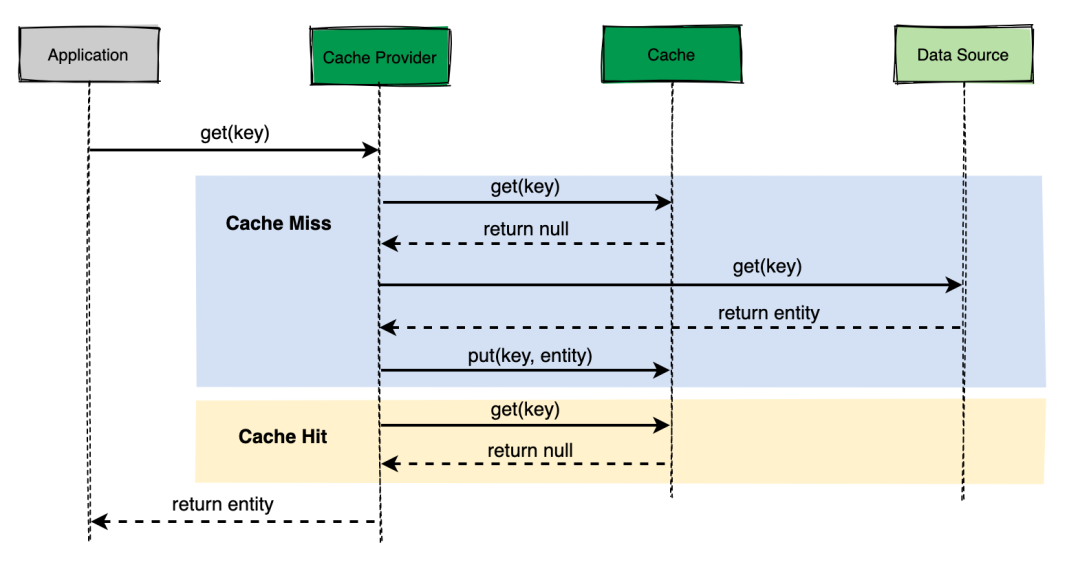

Read through

With Cache Aside, the application code has to maintain two data sources: the cache and the database. Under the Read-Through strategy, the application does not manage the cache and the database itself. It delegates synchronization with the database to the Cache Provider, and all data interaction goes through this abstract cache layer.

As shown in the figure above, the application only interacts with the Cache Provider and does not care whether the data comes from the cache or the database.

When reads are heavy, Read-Through reduces the load on the data source. It is also somewhat resilient to failure of the cache service: if the cache service is down, the Cache Provider can still operate by going directly to the data source.

Read-Through is suitable for scenarios where the same data is requested multiple times. This is very similar to the Cache-Aside strategy, but there is a difference between the two, restated here:

In Cache-Aside, the application is responsible for fetching data from the data source and updating the cache. In Read-Through, this logic is usually handled by an independent Cache Provider.
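As a rough sketch of that difference, the class below plays the role of the Cache Provider: the application calls only `get`, and the provider decides whether to load from the data source. `ReadThroughCache`, `load_from_db`, and the dictionary `database` are hypothetical names for this example, not the API of any particular caching library.

```python
from typing import Callable, Dict, List, Optional


class ReadThroughCache:
    """Illustrative Cache Provider: loads missing keys from the data source itself."""

    def __init__(self, loader: Callable[[str], Optional[int]]) -> None:
        self._loader = loader
        self._store: Dict[str, int] = {}

    def get(self, key: str) -> Optional[int]:
        if key in self._store:
            return self._store[key]      # hit: served from the cache
        value = self._loader(key)        # miss: the provider queries the data source
        if value is not None:
            self._store[key] = value
        return value


database = {"age": 18}
loads: List[str] = []                    # records every trip to the data source


def load_from_db(key: str) -> Optional[int]:
    loads.append(key)
    return database.get(key)


provider = ReadThroughCache(load_from_db)
first = provider.get("age")    # miss: the loader is called
second = provider.get("age")   # hit: the loader is not called again
```

The application code never touches `database` directly, which is the point of the strategy: requesting the same key repeatedly costs only one load.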

Write through

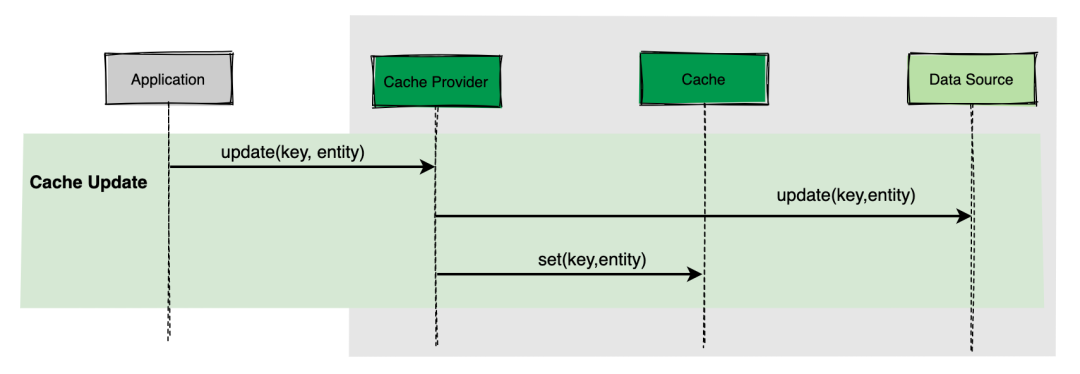

Under the Write-Through strategy, when a data update (write) occurs, the Cache Provider is responsible for updating both the underlying data source and the cache.

The cache stays consistent with the data source, and every write reaches the data source through the abstract cache layer.

The Cache Provider acts like a proxy. The figure above shows the Write-Through workflow.
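A minimal sketch of such a provider is shown below. `WriteThroughCache` and the dictionary `database` are hypothetical, and writing the data source before the cache is an assumption of this example; the text only requires that the provider update both within the same write.

```python
class WriteThroughCache:
    """Illustrative Cache Provider that updates the data source and the cache together."""

    def __init__(self, backing_store):
        self._db = backing_store   # stand-in for the underlying data source
        self._store = {}

    def put(self, key, value):
        self._db[key] = value      # assumed order: data source first ...
        self._store[key] = value   # ... then the cache, within the same call

    def get(self, key):
        if key in self._store:
            return self._store[key]
        return self._db.get(key)


database = {"age": 18}
provider = WriteThroughCache(database)
provider.put("age", 20)            # both copies now hold 20
```

Because every write passes through `put`, the cache and the data source do not diverge as long as the application never writes to the data source directly.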

Write behind

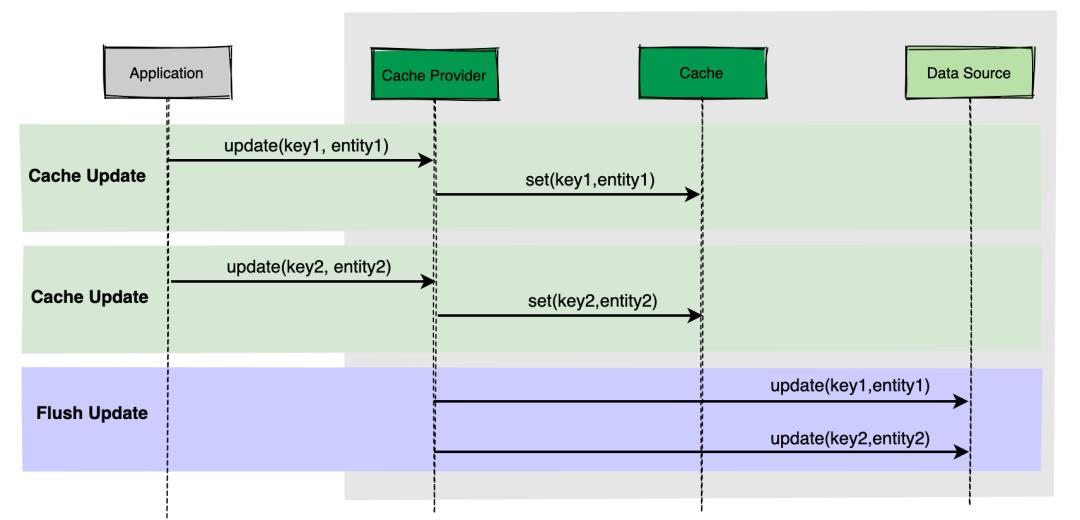

Write behind is also referred to as Write back in some places. Put simply: when the application updates data, only the cache is updated, and the Cache Provider flushes the data to the database at regular intervals. In other words, the write is delayed.

As shown in the figure above, the application updates two pieces of data. The Cache Provider writes them to the cache immediately, but writes them to the database in a batch after a period of time.

This approach has advantages and disadvantages:

The advantage is that writes are very fast, which suits write-heavy scenarios. The disadvantage is that the cache and the database are not strongly consistent, so it should be used with caution in systems that require high consistency.
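The delayed batch write can be sketched like this. `WriteBehindCache` is a hypothetical class, and `flush` is called by hand here; in a real provider a timer or background worker would trigger it at regular intervals.

```python
class WriteBehindCache:
    """Illustrative Cache Provider that buffers writes and flushes them in batches."""

    def __init__(self, backing_store):
        self._db = backing_store   # stand-in for the database
        self._store = {}
        self._dirty = {}           # pending writes, latest value per key

    def put(self, key, value):
        self._store[key] = value   # the cache is updated immediately
        self._dirty[key] = value   # the database write is deferred

    def get(self, key):
        if key in self._store:
            return self._store[key]
        return self._db.get(key)

    def flush(self):
        """Write all pending updates to the database in one batch; return how many."""
        count = len(self._dirty)
        self._db.update(self._dirty)
        self._dirty.clear()
        return count


database = {"age": 18}
provider = WriteBehindCache(database)
provider.put("age", 20)
provider.put("height", 180)

before_flush = dict(database)      # still {"age": 18}: the database lags behind
flushed = provider.flush()         # both pending updates are written in one batch
```

Between `put` and `flush` the cache already returns 20 while the database still holds 18, which is the window of weak consistency described above.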

Summary

Having covered this much, I believe everyone now has a clear understanding of cache update strategies. Finally, here is a brief summary.

There are three main strategies for cache update:

Cache aside

Read/Write through

Write behind

Cache aside usually updates the database first and then deletes the cache. As a safety net, it usually also sets an expiration time on cached data. Read/Write through is generally provided by a Cache Provider that exposes read and write operations, so applications do not need to know whether an operation touches the cache or the database. Write behind, put simply, is delayed writing: the Cache Provider writes to the database in batches at regular intervals. The advantage is that application writes are very fast.