Dropbox Bandaid microservice reverse proxy/Service Mesh proxy analysis

With microservice architecture now widespread, many companies use or build their own API gateway, and some run a Service Mesh for internal services. I recently read the Dropbox article introducing its internal service proxy, "Meet Bandaid, the Dropbox service proxy". The design details carry the polish one expects from a unicorn company, the read is rewarding, and it offers ideas for improving the proxies we design ourselves. What follows is a short set of reading notes. (Dropbox went public on the U.S. stock market not long ago and is one of the few stocks that has risen steadily; I personally invested some money in it. A reminder: the stock market is risky, so invest with caution.)

Background of Bandaid

Bandaid evolved from the company’s internal reverse proxy service and is implemented in Golang. Many mature reverse proxy solutions exist; the main reasons for building one in-house are as follows:

Better integration with internal infrastructure.

Reuse of the company’s internal base libraries (better integration with internal code).

Fewer external dependencies, so the team can develop flexibly on demand.

A better fit for some special use cases within the company.

Most of these factors match our own considerations when developing microservice components. They were also our concerns when we reworked our microservice architecture without using a toolkit such as Go kit; admittedly, no such toolchain existed at that time.

Features of Bandaid

Support multiple load balancing strategies (round-robin, least N random choices, absolute least connection, pinning peer)

Support HTTPS to HTTP

Upstream and downstream support HTTP/2

Routing rewrite

Cache request and response

Logical isolation at the host level

Configuration hot reload

Service discovery

Routing information statistics

Support gRPC proxy

Support HTTP/gRPC health check

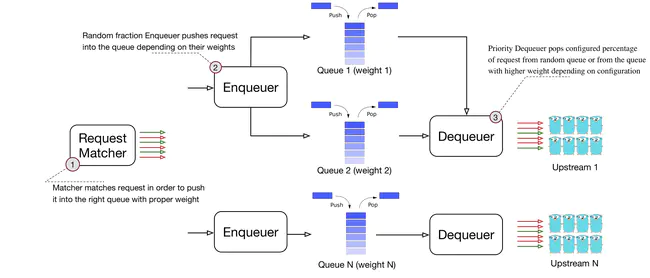

Weighted traffic distribution and canary testing

The rich set of load balancing strategies and the support for HTTP/2 and gRPC stand out. Note that nginx has supported gRPC only since version 1.13.10. Judging by the feature list, Bandaid could also serve directly as a Service Mesh when requirements are not especially demanding. And because it is written in Go, a team that already uses Go faces a low barrier and learning cost, whether using it as-is or extending it.

Bandaid design analysis

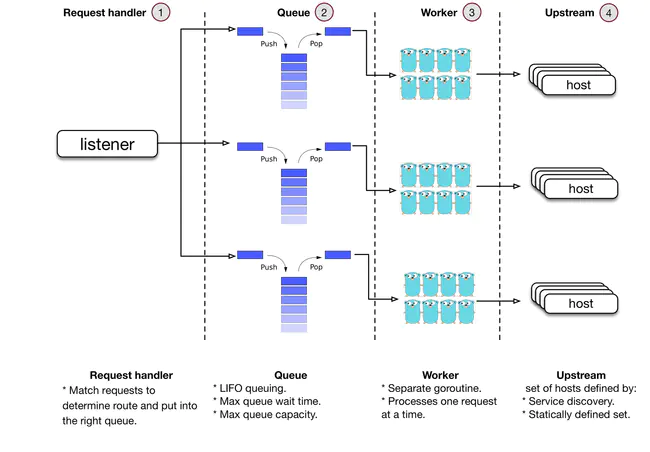

Request queue design

Received requests are processed in LIFO (last in, first out) order. This design seems counter-intuitive, but it is reasonable: in most cases the queue should be empty or nearly empty, so LIFO does not worsen the maximum waiting time in the queue.

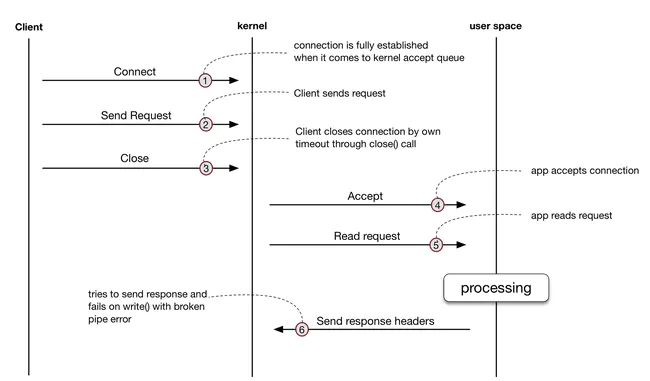

Queue priority and length can be configured per service type, which makes it convenient to implement rate limiting, service degradation, and circuit breaking. Bandaid always accepts TCP connections and hands them over to user-space management. Combined with LIFO, this has a big advantage over leaving connections to be managed in the kernel. If a client unexpectedly closes its TCP connection after sending a request, Bandaid does not get the error right away: the error surfaces only when the request is read, or when the response is written after the request has been processed and the connection turns out to be closed. Processing such requests wastes server resources. With LIFO and user-space connection management, Bandaid can drop such requests, to a certain extent, according to the configured timeout policy, reducing the number of “dead requests” it processes.

Worker design

Workers use a fixed-size pool design. This gives precise control over concurrency and avoids the overhead of frequently creating workers. However, the article also admits that the pool size must be chosen carefully with the workload in mind; otherwise server resources may be underused, or service degradation may be triggered by mistake. When taking requests from the queues, workers honor priority and weight, so canary releases and logical isolation of upstreams are easy to implement.

Load balancing strategy

Round-robin (RR): requests are spread evenly across hosts, like sprinkling pepper evenly over spaghetti. The advantage is simplicity. The disadvantage is that it ignores differences in the number of instances behind each back-end service and in the processing time of each interface, so a very small number of slow services and interfaces can consume a large share of Bandaid’s resources.

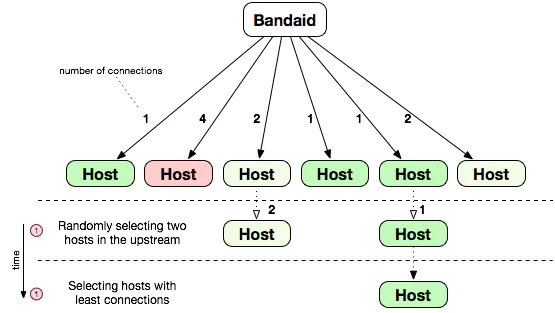
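Round-robin selection, as described above, can be sketched in a few lines of Go. The type `roundRobin` and the host addresses are hypothetical, and the host list is assumed to be non-empty.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// roundRobin cycles through hosts in order, ignoring their current load.
type roundRobin struct {
	next  uint64 // kept first so it stays 64-bit aligned for atomic access
	hosts []string
}

// pick returns the next host; it is safe to call from many goroutines.
func (rr *roundRobin) pick() string {
	n := atomic.AddUint64(&rr.next, 1) - 1
	return rr.hosts[n%uint64(len(rr.hosts))]
}

func main() {
	rr := &roundRobin{hosts: []string{"10.0.0.1:80", "10.0.0.2:80", "10.0.0.3:80"}}
	for i := 0; i < 4; i++ {
		fmt.Println(rr.pick()) // the fourth pick wraps around to the first host
	}
}
```

Nothing in `pick` looks at how busy a host is, which is exactly why slow hosts keep receiving their full share of requests.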

Least N random choices: first randomly select N candidate upstream hosts, then choose the one with the fewest connections (taken as the lowest current load) as the target host.

This method works well most of the time, but it breaks down for small services that fail quickly. Such a service holds few connections and is therefore selected with high probability, yet a low connection count does not mean its current load is actually low. Absolute least connection is the way to mitigate this.

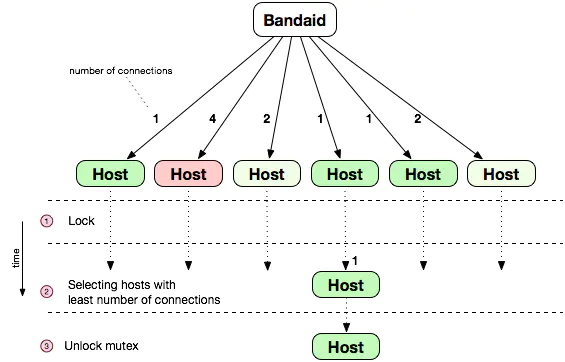

Absolute least connection: select the host with the fewest connections among all hosts as the target host.

Summary

Judging from its design, Bandaid has good potential both as a reverse proxy and as a service mesh. However, the Dropbox team has not published performance test data for Bandaid, and the code has not been open-sourced. We may therefore have to wait a while before we can fully explore Bandaid.
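For comparison, absolute least connection scans every host instead of a random sample. The sketch below is an assumption-laden illustration: `upstream`, `balancer`, `acquire`, and `release` are hypothetical names, and ties are broken by list order.

```go
package main

import (
	"fmt"
	"sync"
)

// upstream is a hypothetical host with a count of in-flight connections.
type upstream struct {
	addr   string
	active int
}

// balancer tracks connection counts for all hosts under one lock.
type balancer struct {
	mu    sync.Mutex
	hosts []*upstream
}

// acquire returns the host with the globally lowest connection count and
// records the new connection. It returns nil when there are no hosts.
func (b *balancer) acquire() *upstream {
	b.mu.Lock()
	defer b.mu.Unlock()
	var best *upstream
	for _, h := range b.hosts {
		if best == nil || h.active < best.active {
			best = h
		}
	}
	if best != nil {
		best.active++
	}
	return best
}

// release must be called when the connection to h is finished.
func (b *balancer) release(h *upstream) {
	b.mu.Lock()
	defer b.mu.Unlock()
	if h.active > 0 {
		h.active--
	}
}

func main() {
	b := &balancer{hosts: []*upstream{
		{addr: "10.0.0.1:80", active: 2},
		{addr: "10.0.0.2:80", active: 0},
		{addr: "10.0.0.3:80", active: 1},
	}}
	h := b.acquire()
	fmt.Println(h.addr) // 10.0.0.2:80
	b.release(h)
}
```

The full scan costs time proportional to the number of hosts on every request, which is the price paid for always choosing the globally least-loaded host.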

ref: liudanking.com